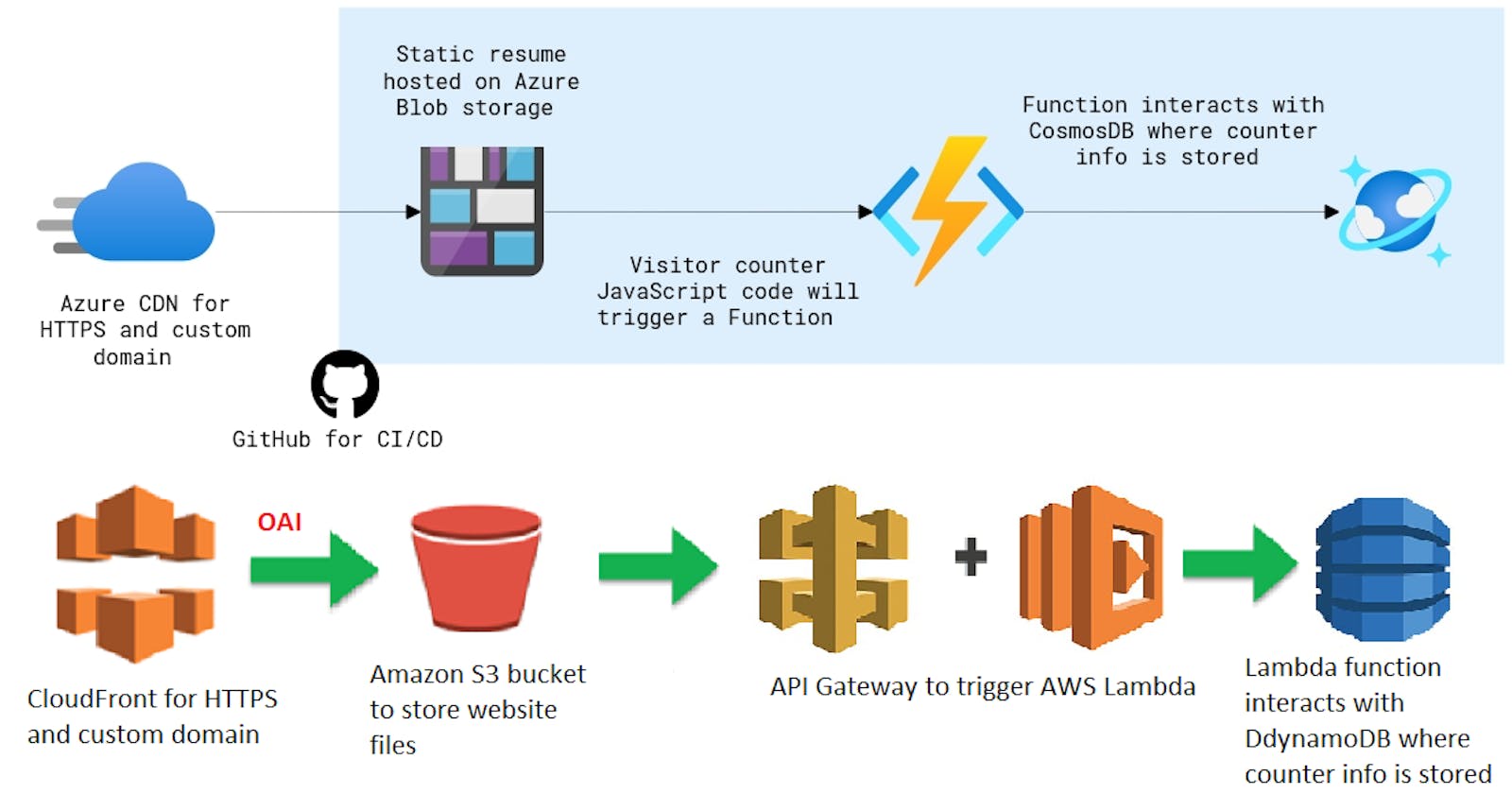

The goal of this challenge is to build your resume as a website hosted on Azure or AWS. I recently earned the AWS Solutions Architect certification and was so excited about it that I decided to go for the SysOps exam next. While studying for that exam, I came across this video on YouTube about how many certifications you need to get a cloud job. You may have guessed it: hands-on experience and aptitude are your most important assets. That's why I decided to take on this challenge, which I first saw on A Cloud Guru.

I completed the challenge both on AWS and Azure.

Azure

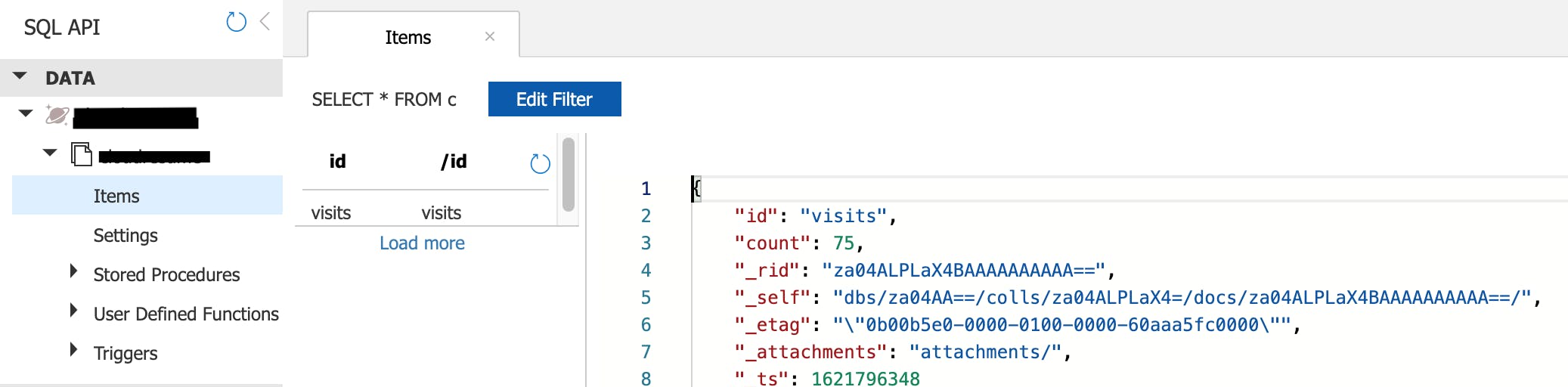

1- First, I created a Cosmos DB account (Core (SQL) API) with a container to store the site visit count.
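The function in the next step reads an item whose id and partition key value are both `visits`, so that item has to exist in the container before the first request. A minimal sketch of such a seed document, assuming the container's partition key path is `/id` (the post does not say) and that the counter starts at zero:

```python
import json

# Hypothetical seed document for the visits counter.
# Assumption: the container's partition key path is /id, so the partition
# key value equals the item id ("visits"), matching the read in step 2.
seed_item = {"id": "visits", "count": 0}

# Print the JSON form, e.g. to paste into the portal's Data Explorer.
print(json.dumps(seed_item, indent=2))
```

If your partition key path is different, the document needs that property as well, with the value `visits`.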

2- The second part was to create a Function to retrieve and update the visit count. I decided to go with Python, but I have to say this language is not really well suited to Azure Functions: you have to create your package outside of Azure, and there are not many samples for CRUD operations on Cosmos DB. I definitely had some difficulties here but eventually sorted them out with this article. Below is part of the code of the function I deployed to Azure using VS Code.

    import logging

    import azure.functions as func

    # `container` is the Cosmos DB container client, created elsewhere in this module.

    def main(req: func.HttpRequest) -> func.HttpResponse:
        logging.info('Python HTTP trigger function processed a request.')

        # Read the counter item, increment it, and write it back.
        getcount = container.read_item(item='visits', partition_key='visits')
        getcount['count'] = getcount['count'] + 1
        siteVisited = container.upsert_item(body=getcount)

        counts = '{0}'.format(siteVisited.get('count'))

        return func.HttpResponse(
            status_code=200,
            headers={
                'Access-Control-Allow-Origin': '*',
                'Access-Control-Allow-Headers': 'Content-Type,Date,x-ms-session-token',
                'Access-Control-Allow-Credentials': 'false',
                'Content-Type': 'application/json'
            },
            body=counts
        )

This function returns only the count value. Don't forget to add the response headers in the return statement. One thing I like about Azure is that HTTP trigger functions come with a publicly accessible URL out of the box (as opposed to AWS, where you have to create the API separately as a trigger for the function). You still have to configure CORS properly so that the function can be called from your website.
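When the headers are set in code as in the function above, `'*'` lets any site call the endpoint. A hedged sketch of a stricter variant that only echoes back origins from an allow-list; `ALLOWED_ORIGINS`, `cors_headers`, and the example domain are hypothetical names, not part of the original function:

```python
# Hypothetical allow-list; replace with the domain(s) serving your resume.
ALLOWED_ORIGINS = {"https://resume.example.com"}


def cors_headers(request_origin, allowed_origins=ALLOWED_ORIGINS):
    """Build response headers, allowing only known origins."""
    headers = {
        "Content-Type": "application/json",
        # Tell caches that the response depends on the Origin request header.
        "Vary": "Origin",
    }
    if request_origin in allowed_origins:
        headers["Access-Control-Allow-Origin"] = request_origin
    return headers
```

Inside the handler this could be used as `headers=cors_headers(req.headers.get('Origin'))`. A request from an unknown origin then gets no `Access-Control-Allow-Origin` header, and the browser blocks the response.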

3- Once I was sure that my function was working and returning the visit count through the GET method, it was time for the frontend website. I downloaded a template from themezy and updated it with my resume information. At this point, the challenge was to write the JavaScript code to retrieve the count value. I did a lot of googling here: I knew I had to use the fetch API, but I couldn't get it to work. I finally came up with this code:

    const url = 'your function URL';

    fetch(url, {
        method: "GET",
        headers: {
            "Content-Type": "application/json",
            "Accept": "application/json"
        },
    })
        .then(response => {
            return response.json()
        })
        .then(response => {
            console.log("Calling API to get visits counts");
            const nbvisited = response;
            console.log(nbvisited);
            // Show the count in the page element with id "nbvisited".
            document.getElementById('nbvisited').innerText = nbvisited;
        })
        .catch(function(error) {
            console.log(error);
        });
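Before debugging the browser side, it can help to confirm from a script that the endpoint answers a GET with a count and a CORS header. A minimal sketch using only the Python standard library; `parse_count` and `fetch_count` are hypothetical helper names and the URL in the example is a placeholder:

```python
import json
import urllib.request


def parse_count(body):
    """Turn the JSON response body into an int (accepts 5 or "5")."""
    return int(json.loads(body))


def fetch_count(url, timeout=10):
    """GET the counter endpoint; return (count, Access-Control-Allow-Origin)."""
    request = urllib.request.Request(url, headers={"Accept": "application/json"})
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = response.read().decode("utf-8")
        allow_origin = response.headers.get("Access-Control-Allow-Origin")
    return parse_count(body), allow_origin


# Example (placeholder URL, so left commented out):
# count, allow_origin = fetch_count("https://<your-function-url>")
# print(count, allow_origin)
```

Keep in mind that every call increments the counter, so this is a check to run a few times, not in a loop.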

4- The next step was to set up Azure Blob Storage as a static website, then create a CDN endpoint with the blob storage as the origin and configure HTTPS with your custom domain. You need to look at the CORS settings of the blob storage as well.

5- CI/CD with GitHub

I pushed my project to a GitHub repository and created workflows to update the frontend (update the blob storage and purge the Azure CDN) on push. From the Azure Function App, you can configure CI/CD with GitHub in the Deployment Center, so your function is updated on Azure whenever it is modified on GitHub. However, the generated workflow doesn't include code testing (because I use Python), so I added a testing step to the workflow file using nose2. If you use Python as well, make sure that your functions are importable as modules, otherwise nose2 will not pick them up.
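One way to keep the function testable without a real database is to move the read-increment-write logic into a small helper and test it against an in-memory stand-in. The sketch below is an assumption about how such a test could look, not the test from the repository; `increment_visits`, `FakeContainer`, and `IncrementVisitsTest` are hypothetical names. nose2 discovers `unittest.TestCase` classes in modules whose file names start with `test`.

```python
import unittest


def increment_visits(container):
    """Read the 'visits' item, add one to its count, and write it back."""
    item = container.read_item(item="visits", partition_key="visits")
    item["count"] = item["count"] + 1
    saved = container.upsert_item(body=item)
    return saved["count"]


class FakeContainer:
    """In-memory stand-in exposing the two container methods the helper uses."""

    def __init__(self, count=0):
        self._items = {"visits": {"id": "visits", "count": count}}

    def read_item(self, item, partition_key):
        # Return a copy so callers cannot mutate stored state by accident.
        return dict(self._items[item])

    def upsert_item(self, body):
        self._items[body["id"]] = dict(body)
        return dict(body)


class IncrementVisitsTest(unittest.TestCase):
    def test_count_goes_up_by_one(self):
        container = FakeContainer(count=41)
        self.assertEqual(increment_visits(container), 42)
        # A second call sees the value stored by the first.
        self.assertEqual(increment_visits(container), 43)
```

The HTTP handler would then call `increment_visits(container)` with the real Cosmos DB container client, while the test never touches the network.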

Workflow for backend

    name: Test and Deploy function to Azure Function App

    on:
      push:
        branches: [ main ]
        paths:
          - 'backend/**'
      workflow_dispatch:

    env:
      AZURE_FUNCTIONAPP_PACKAGE_PATH: 'backend' # set this to the path to your web app project, defaults to the repository root
      PYTHON_VERSION: '3.8' # set this to the python version to use (supports 3.6, 3.7, 3.8)

    jobs:
      build-and-deploy:
        runs-on: ubuntu-latest
        steps:
          - name: 'Checkout GitHub Action'
            uses: actions/checkout@v2

          - name: Setup Python ${{ env.PYTHON_VERSION }} Environment
            uses: actions/setup-python@v1
            with:
              python-version: ${{ env.PYTHON_VERSION }}

          - name: 'Resolve Project Dependencies Using Pip'
            shell: bash
            run: |
              pushd './${{ env.AZURE_FUNCTIONAPP_PACKAGE_PATH }}'
              python -m pip install --upgrade pip
              pip install -r requirements.txt --target=".python_packages/lib/site-packages"
              popd

          - name: 'Test functions with Nose2'
            shell: bash
            run: |
              pushd './${{ env.AZURE_FUNCTIONAPP_PACKAGE_PATH }}'
              python -m pip install --upgrade pip
              pip install nose2
              pip install azure-functions
              pip install azure-cosmos
              python -m nose2

          - name: 'Run Azure Functions Action'
            uses: Azure/functions-action@v1
            id: fa
            with:
              app-name: 'hpfgetresumecounter'
              slot-name: 'production'
              package: ${{ env.AZURE_FUNCTIONAPP_PACKAGE_PATH }}
              publish-profile: ${{ secrets.AzureAppService_PublishProfile_a7603b82524748699547273836b49631 }}

6- Results

Visit my cloud resume on Azure here.

You can check out my GitHub repository for this project.

AWS

I actually completed this challenge on AWS before doing it on Azure. I tend to prefer AWS because I find it easier to understand. The steps are pretty much identical, except for the better Python integration on AWS.

1- Created a DynamoDB table to store my website visit count as an item. It is straightforward: just give your table a name and specify the primary key. No need to choose an API or data model.

2- Created a Lambda function to update the visit count in the DynamoDB table and return its value. Again, make sure to include the appropriate headers in the return statement. The function needs an execution role with read and write access to your DynamoDB table.

    import json
    import boto3

    dynamodb = boto3.resource('dynamodb')

    def lambda_handler(event, context):
        table = dynamodb.Table('NameofTable')

        # Atomically increment the counter and get the new value back.
        siteVisited = table.update_item(
            Key={
                'id': 'visits'
            },
            UpdateExpression='SET counts = counts + :val',
            ExpressionAttributeValues={
                ':val': 1
            },
            ReturnValues="UPDATED_NEW"
        )

        # boto3 returns the number as a Decimal, so convert it to a string.
        counts = str(siteVisited['Attributes']['counts'])

        return {
            'statusCode': 200,
            'headers': {
                'Access-Control-Allow-Origin': '*',
                'Access-Control-Allow-Headers': 'Content-Type,X-Amz-Date,Authorization,X-Api-Key,X-Amz-Security-Token',
                'Access-Control-Allow-Credentials': 'false',
                'Content-Type': 'application/json'
            },
            'body': json.dumps(counts)
        }

This function also returns only the count value.
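Note that `SET counts = counts + :val` fails if the item or its `counts` attribute does not exist yet. A hedged variant using DynamoDB's `if_not_exists` update function starts from zero in that case; `increment_visits` is a hypothetical helper that takes the boto3 `Table` resource as an argument:

```python
def increment_visits(table, item_id="visits"):
    """Atomically add 1 to `counts`, starting from 0 if it is missing."""
    response = table.update_item(
        Key={"id": item_id},
        UpdateExpression="SET counts = if_not_exists(counts, :zero) + :val",
        ExpressionAttributeValues={":zero": 0, ":val": 1},
        ReturnValues="UPDATED_NEW",
    )
    # boto3 returns numbers as Decimal; convert to int for JSON serialization.
    return int(response["Attributes"]["counts"])
```

In the handler this could be called as `increment_visits(dynamodb.Table('NameofTable'))`, which removes the need to create the item by hand before the first visit.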

3- Created an API Gateway trigger for the function. Make sure to give API Gateway the lambda:InvokeFunction permission on the function. Also check the CORS settings if you run into issues.
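As a rough sketch, that permission is a resource-based policy statement on the function. The helper below only builds the arguments one could pass to the boto3 Lambda client's `add_permission` call; `invoke_permission_args` and the statement id are hypothetical, and the source ARN pattern is deliberately broad:

```python
def invoke_permission_args(function_name, region, account_id, api_id):
    """Arguments for lambda_client.add_permission(**args)."""
    return {
        "FunctionName": function_name,
        "StatementId": "allow-apigateway-invoke",  # hypothetical id
        "Action": "lambda:InvokeFunction",
        "Principal": "apigateway.amazonaws.com",
        # Any stage, method, and path of this one API; tighten if needed.
        "SourceArn": f"arn:aws:execute-api:{region}:{account_id}:{api_id}/*",
    }
```

With credentials configured, this could be applied as `boto3.client("lambda").add_permission(**invoke_permission_args(...))`, passing your own function name, region, account id, and API id.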

4- From there, it is almost the same as on Azure:

The JavaScript code to fetch the API is identical. Just put in the URL of the AWS API Gateway.

Created an S3 bucket to store the website files. No need to set it up as a static website or make it publicly available.

Created a CloudFront distribution with the S3 bucket as the origin. Configure an OAI to allow access to the bucket only from the CloudFront distribution (you have to create an identity).

Configured a custom domain with an AWS-managed SSL certificate for HTTPS.
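To make the OAI step more concrete, here is a sketch of the kind of bucket policy that grants read access to a single CloudFront origin access identity. `oai_bucket_policy` is a hypothetical helper, and the bucket name and OAI id in the example are placeholders:

```python
import json


def oai_bucket_policy(bucket_name, oai_id):
    """Bucket policy letting one CloudFront OAI read objects in the bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowCloudFrontOAIRead",
                "Effect": "Allow",
                "Principal": {
                    "AWS": "arn:aws:iam::cloudfront:user/"
                           f"CloudFront Origin Access Identity {oai_id}"
                },
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
            }
        ],
    }


# Placeholder bucket name and OAI id, for illustration only.
print(json.dumps(oai_bucket_policy("my-resume-bucket", "EXAMPLEOAIID"), indent=2))
```

Because only the identity is allowed to call `s3:GetObject`, the bucket itself can stay private and visitors reach the files through CloudFront only.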

5- CI/CD with GitHub

I pushed my project to a GitHub repository and created workflows to update the frontend (update the S3 bucket and invalidate the CloudFront distribution) on push. I uploaded the Python function package to the backend folder of the repository and created a workflow file to test it with nose2 and deploy it to Lambda. If you use Python as well, make sure that your functions are importable as modules, otherwise nose2 will not pick them up.

Workflow for backend

    name: Test and Deploy to Lambda

    on:
      push:
        branches: [ main ]
        paths:
          - 'backend/**'

    jobs:
      deploy_source:
        name: Test and Deploy to Lambda
        runs-on: ubuntu-latest
        steps:
          - name: checkout source code
            uses: actions/checkout@v1

          - name: 'Configure AWS credentials'
            uses: aws-actions/configure-aws-credentials@v1
            with:
              aws-access-key-id: ${{ secrets.AWS_ACCESS_KEY_ID }}
              aws-secret-access-key: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
              aws-region: 'us-east-1'

          - name: 'Test functions with Nose2'
            run: |
              pushd './backend'
              pip install awscli
              python -m pip install --upgrade pip
              pip install nose2
              pip install boto3
              python -m nose2

          - name: Deploy to Lambda
            uses: appleboy/lambda-action@master
            with:
              aws-access-key-id: ${{ secrets.AWS_ACCESS_KEY_ID }}
              aws-secret-access-key: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
              aws-region: 'us-east-1'
              function_name: HPFResumeVisitCounter
              source: backend/HPFResumeVisitCounter/GetResumeCounter.py

6- Results

Visit my cloud resume on AWS here.

You can check out my GitHub repository for this project.